Learning Real-World Robot Policies by Dreaming

AJ Piergiovanni, Alan Wu, Michael S. Ryoo

School of Informatics, Computing, and Engineering

Indiana University Bloomington

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), November 2019

[arXiv: 1805.07813]

Abstract

Learning to control robots directly based on images is a primary challenge in robotics. However, many existing reinforcement learning approaches require iteratively obtaining millions of robot samples to learn a policy, which can take significant time. In this paper, we focus on learning a realistic world model capturing the dynamics of scene changes conditioned on robot actions. Our dreaming model can emulate samples equivalent to a sequence of images from the actual environment, technically by learning an action-conditioned future representation/scene regressor. This allows the agent to learn action policies (i.e., visuomotor policies) by interacting with the dreaming model rather than the real world. We experimentally confirm that our dreaming model enables robot learning of policies that transfer to the real world.
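To make the idea of interacting with a dreaming model concrete, here is a minimal sketch of a policy rollout that never touches a real robot. It is not the authors' implementation: the linear-plus-tanh `future_regressor`, the linear `policy`, the dimensions, and all names are hypothetical stand-ins for what would in practice be trained neural networks.

```python
import numpy as np

rng = np.random.default_rng(0)

LATENT_DIM = 8   # hypothetical size of the learned scene representation
ACTION_DIM = 2   # hypothetical action size, e.g. (forward, turn)

# Stand-in weights for an action-conditioned future regressor.
# A real dreaming model would learn these from recorded robot data.
A = rng.normal(scale=0.3, size=(LATENT_DIM, LATENT_DIM))
B = rng.normal(scale=0.3, size=(LATENT_DIM, ACTION_DIM))


def future_regressor(z, a):
    """Predict the next scene representation from state z and action a."""
    return np.tanh(A @ z + B @ a)


def policy(z, W):
    """Toy linear policy mapping a scene representation to a bounded action."""
    return np.tanh(W @ z)


def dream_rollout(z0, W, steps):
    """Roll the policy forward inside the model instead of the real world."""
    states, actions = [z0], []
    z = z0
    for _ in range(steps):
        a = policy(z, W)
        z = future_regressor(z, a)  # the "dreamed" next state
        actions.append(a)
        states.append(z)
    return np.stack(states), np.stack(actions)


W = rng.normal(scale=0.1, size=(ACTION_DIM, LATENT_DIM))
z0 = rng.normal(size=LATENT_DIM)
states, actions = dream_rollout(z0, W, steps=10)
print(states.shape, actions.shape)
```

A reinforcement learning algorithm would score such rollouts with a task reward and update `W`; that step is omitted here because the page does not specify it.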

Example Dream Sequences

We trained our model to explicitly reconstruct realistic future images. Here are some example dream sequences for the 'approach' task:

None of these sequences appear in the training data; the model has never seen these scenes. It generated the images from random samples, yet because of how the dreaming model was trained, they still appear quite realistic. We showed that training on these unseen trajectories yields a better policy, one that transfers to the real-world environment.
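As a rough illustration of generating unseen scenes from random samples, the sketch below draws random vectors and decodes them into frames. The page only says "random samples"; treating them as draws from a standard normal distribution over a latent space is an assumption, and the linear-plus-sigmoid `decode` function, the shapes, and the names are hypothetical stand-ins for a trained image decoder.

```python
import numpy as np

rng = np.random.default_rng(1)

LATENT_DIM = 8          # hypothetical latent size
IMAGE_SHAPE = (16, 16)  # hypothetical frame size, kept tiny for the sketch

# Stand-in decoder weights; a trained decoder would replace this matrix.
D = rng.normal(scale=0.5, size=(IMAGE_SHAPE[0] * IMAGE_SHAPE[1], LATENT_DIM))


def decode(z):
    """Map a latent vector to a frame with pixel values in [0, 1]."""
    logits = D @ z
    return (1.0 / (1.0 + np.exp(-logits))).reshape(IMAGE_SHAPE)


def sample_dream_starts(n):
    """Assumption: start states are drawn from a standard normal distribution."""
    return rng.standard_normal((n, LATENT_DIM))


starts = sample_dream_starts(4)
frames = np.stack([decode(z) for z in starts])
print(frames.shape)
```

Each sampled start could then seed a rollout through the dreaming model, producing a trajectory that is not a copy of any recorded one.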

Some example dream sequences for the 'avoid' task:

Results

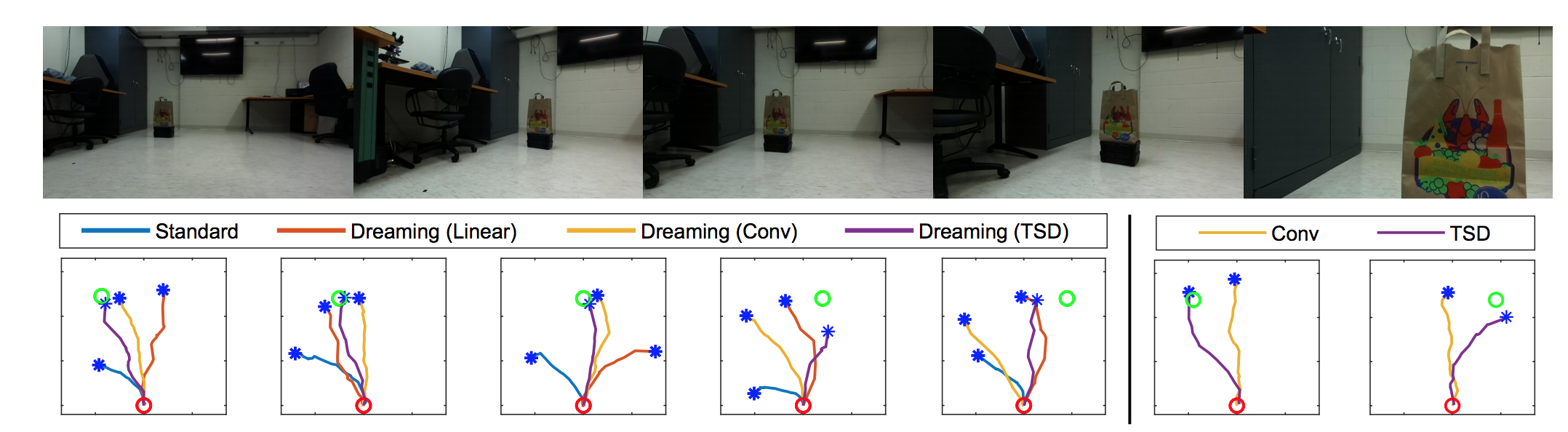

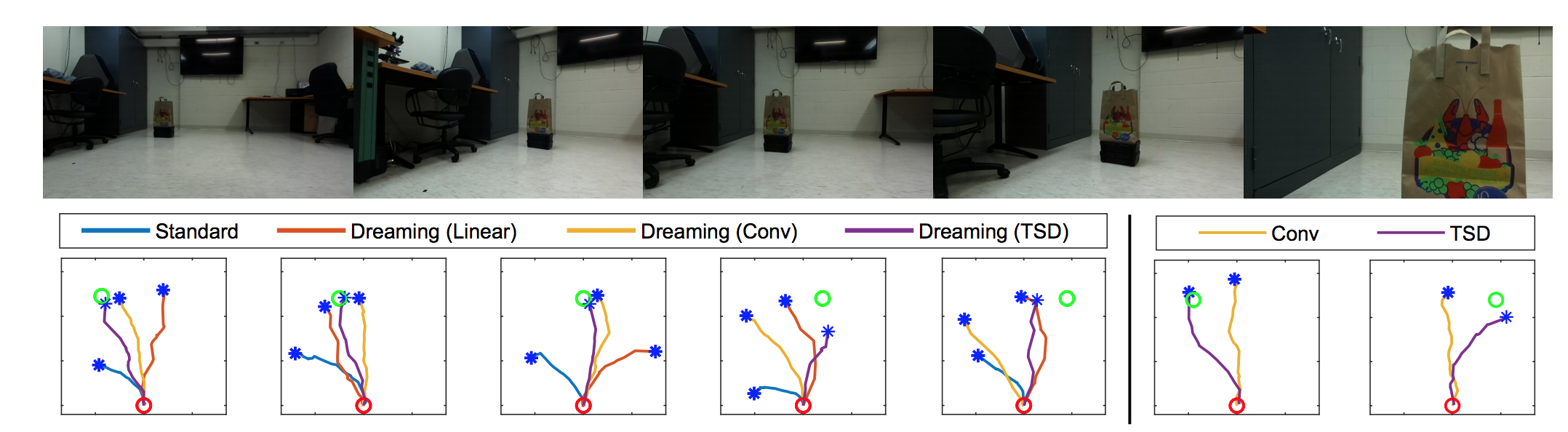

Example trajectories from the real-world robot. The policy was learned entirely in the 'dream' and applied to the real-world environment.

Top: real-world robot frames. Bottom: trajectories of different models during the 'approach' task for seen (bottom left) and unseen (bottom right) targets.